For about two days now, I’ve been fighting with my new Rancher RKE2 cluster, trying to connect it to Azure Arc-enabled Kubernetes for easier deployment. However I approached it, I always ended up with the same line:

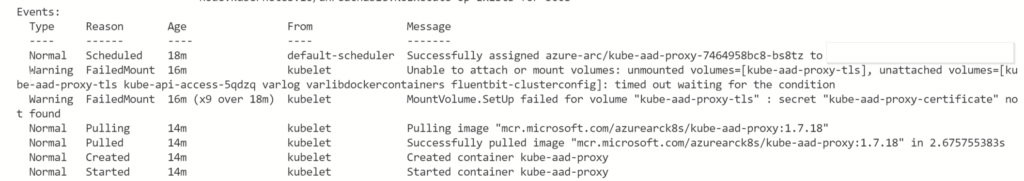

`secret "kube-aad-proxy-certificate" not found`

Overall, the Rancher RKE2 installation has been fantastic. I’ve been running it on Rocky Linux 9, which I’d wanted to try out for a while, and everything worked brilliantly up until the point where I tried to connect the cluster to Azure Arc.

I could find exactly two links on the subject. One was slightly helpful in identifying the Microsoft endpoint the secret was fetched from, which ultimately led me down the right path, but beyond that there doesn’t seem to be much written about the topic.

In short, the kube-aad-proxy service couldn’t fetch the secret it needed from Azure to establish the connection, so the pod failed to start because the secret couldn’t be found.

## It’s always DNS

The resolv.conf that Hetzner provides contains four nameservers: two IPv4 and two IPv6. Since the resolver (mostly) only uses the first three nameservers in resolv.conf, this could pose a problem (though it usually doesn’t), and it led me to check whether the CoreDNS service had been provisioned correctly. There were some error messages in the CoreDNS pods about too many nameservers, but when I exec’d into one of them and tried to look up some records, everything worked fine.
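To see which nameservers would actually be used, a small script can split a resolv.conf into the entries a resolver honours and the ones it drops. This is a minimal sketch, assuming glibc’s limit of three nameservers (`MAXNS`); other resolvers such as musl may behave differently. The addresses in the example are hypothetical documentation-range values, not Hetzner’s real resolvers.

```python
# Sketch: flag nameservers that a glibc-style resolver would ignore.
# Assumption: at most MAX_NAMESERVERS (3, glibc's MAXNS) entries are used;
# other resolver implementations may differ.
MAX_NAMESERVERS = 3


def split_nameservers(resolv_conf: str) -> tuple[list[str], list[str]]:
    """Return (used, ignored) nameserver addresses in file order."""
    servers = []
    for line in resolv_conf.splitlines():
        line = line.strip()
        # Skip blank lines and comments (resolv.conf accepts '#' or ';').
        if not line or line[0] in "#;":
            continue
        fields = line.split()
        if fields[0] == "nameserver" and len(fields) > 1:
            servers.append(fields[1])
    return servers[:MAX_NAMESERVERS], servers[MAX_NAMESERVERS:]


if __name__ == "__main__":
    # Hypothetical addresses from documentation ranges (RFC 5737 / RFC 3849).
    example = """\
nameserver 192.0.2.1
nameserver 192.0.2.2
nameserver 2001:db8::1
nameserver 2001:db8::2
"""
    used, ignored = split_nameservers(example)
    print("used:", used)
    print("ignored:", ignored)
```

On a node you could pass the contents of `/etc/resolv.conf` instead of the example string. Because the cut-off is purely positional, whichever nameserver happens to be listed fourth is the one silently dropped.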

Finally, I started up a blank-ish Ubuntu pod (weibeld/ubuntu-networking) and tried some DNS lookups there… nothing.
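The same kind of lookup can be scripted so it is easy to repeat on pods scheduled to different nodes. This is a minimal sketch, assuming Python 3 is available wherever you run it (the networking image may not ship it). It goes through the system resolver, so inside a pod it exercises the pod’s resolv.conf and therefore CoreDNS. The default hostnames are assumptions: `kubernetes.default.svc.cluster.local` presumes the default `cluster.local` cluster domain.

```python
import socket
import sys


def check_dns(hostname: str) -> tuple[bool, list[str]]:
    """Resolve hostname via the system resolver; return (ok, addresses or error)."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except socket.gaierror as exc:
        return False, [str(exc)]
    # Each entry's sockaddr starts with the address; deduplicate, keep order.
    addresses = list(dict.fromkeys(info[4][0] for info in infos))
    return True, addresses


if __name__ == "__main__":
    # Hostnames come from the command line; these defaults are assumptions.
    names = sys.argv[1:] or ["kubernetes.default.svc.cluster.local", "example.com"]
    for name in names:
        ok, result = check_dns(name)
        print(f"{'OK  ' if ok else 'FAIL'} {name}: {', '.join(result)}")
```

Running it on pods pinned to different nodes and comparing the OK/FAIL lines makes a node-specific failure stand out quickly.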

I tried deploying it on a couple of different nodes… nothing. On the main control plane node… it worked. OK, so something clearly went wrong while provisioning the cluster… let’s do it again.

After reinstalling the cluster on the control plane nodes (leaving the agent nodes for later), DNS worked. When I ran the command again, there was a brief moment of frustration as the same message showed up in the kube-aad-proxy logs, but…

Now, it deployed!

The moral of the story: whenever something’s broken, it’s always DNS.